These clothes use outlandish designs to trick facial recognition software into thinking you're not a human

A lens-shaped mask makes its user undetectable to facial recognition algorithms while still allowing humans to read facial expressions and identity.

The mask's curvature blocks facial recognition from all angles.

"Because of its transparency you will not lose your identity and facial expressions," von Leeuwenstein writes, "so it's still possible to interact with the people around you."

A Dutch design student invented a projector that superimposes an image of a different face over that of the wearer.

Jing-cai Liu created the wearable face projector, in which a "small beamer projects a different appearance on your face, giving you a completely new appearance."

The device shifts rapidly between faces being projected, making detection even more difficult.

Images of Liu's face projector went viral last month after misleading tweets claimed it was being used by protesters in Hong Kong. This claim was later debunked.

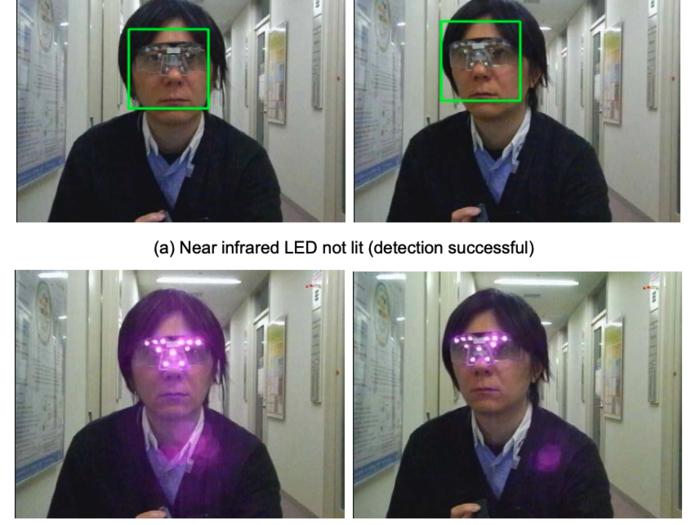

A Japanese college professor designed goggles fitted with LEDs that thwart facial recognition.

Isao Echizen, a professor at the National Institute of Informatics in Tokyo, designed the "privacy visor" as a safeguard against security cameras that could log someone's face without their permission.

Images from Echizen's lab show how the visor blocks AI's ability to detect a face.

The device is fitted with "a near-infrared LED that appends noise to photographed images without affecting human visibility."

When the visor is switched on, the wearer's face no longer registers as a human face to the AI, as indicated by the lack of green boxes above.

An artist designed a toolkit of avant-garde makeup and styling tips that can make faces unrecognizable to AI.

A makeup technique known as CV Dazzle, pioneered by the artist Adam Harvey, uses fashion to combat facial recognition. It was recently featured at a workshop at the Coreana Museum of Art in Seoul, pictured here.

CV Dazzle combines makeup, hair extensions, accessories, and gems to transform people's faces.

The technique gets its name from a World War I tactic: naval vessels were painted with black and white stripes, making it harder for distant ships to judge their size and the direction they were heading.

Sanne Weekers, a design student in the Netherlands, created a headscarf decorated with faces intended to confuse algorithms.

"By giving an overload of information software gets confused, rendering you invisible," Weekers wrote of the scarf.

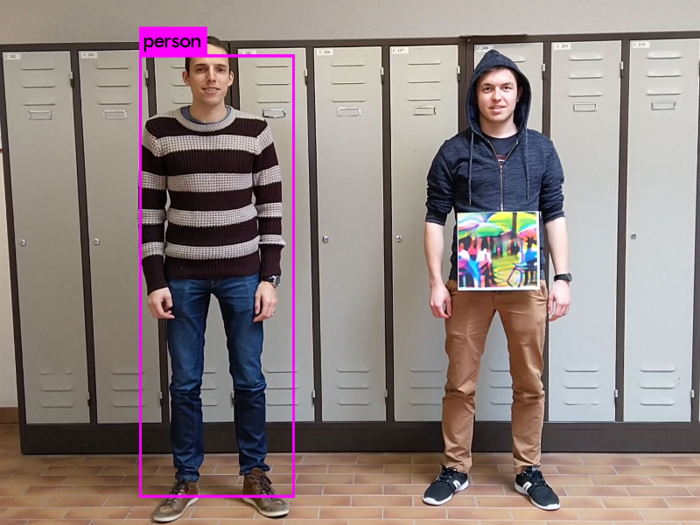

Belgian scientists developed a prototype for a graphic print that could be added to clothing to "attack" and baffle surveillance technology.

Belgian computer scientists Simen Thys, Wiebe Van Ranst, and Toon Goedemé designed "adversarial patches" as part of a study funded by KU Leuven.

"We believe that, if we combine this technique with a sophisticated clothing simulation, we can design a T-shirt print that can make a person virtually invisible for automatic surveillance cameras," the researchers wrote.

An artist created masks that evade facial recognition and send a message about invasions of privacy.

"'Facial Weaponization Suite' protests against biometric facial recognition — and the inequalities these technologies propagate — by making 'collective masks' in workshops that are modeled from the aggregated facial data of participants, resulting in amorphous masks that cannot be detected as human faces by biometric facial recognition technologies," creator Zach Blas writes.

Blas' masks also explore the potential of algorithm-driven facial recognition to enact bias and produce false positives.

Blas intended the masks pictured above to depict the "tripartite conception of blackness: the inability of biometric technologies to detect dark skin as racist, the favoring of black in militant aesthetics, and black as that which informatically obfuscates," he writes.
