The five-letter word ‘Speed’ is as prized a commodity as gold in financial markets across the globe. The faster one can access market information, the faster one can put a pre-programmed trading strategy into action and execute a greater volume of trades, more efficiently and more swiftly than a single dealer at a trading desk can. Much has evolved since the days when Reuters used pigeons to carry news of stocks traded on the Paris Stock Exchange to its clients faster than the post train, but the need for ‘speed’ in the quest for greater profits has remained a constant.

While open outcry trading in the pit gave way to manual trading on computers, the next inflection point in the evolution of trading is what we are experiencing today: ‘Algorithms’ programmed to release orders and execute trades.

With the advent of low-latency trading architecture, ‘speed’ is the deciding factor that separates profits from ‘BIG’ profits. More importantly, the same holds for losses. Either way, the race to zero latency has gone very far, and now is the time to re-evaluate how fast is fast enough.

The more complex a trading system, the greater the risk

As much as algorithmic trading can be a boon in terms of increasing the probability of greater profits, the collateral damage it can unleash, if implemented without a proper risk management apparatus, can be catastrophic.

Organizations such as Deutsche Bank, Knight Capital, Goldman Sachs and Credit Suisse serve as examples that testify to the fact that increasingly complicated trading systems have raised the likelihood of inadvertent errors, costing anywhere from millions to billions of dollars, that erode wealth.

As markets and trading systems have evolved, so has the nature of risk. While systemic risks remain the same for manual, rule-based traders and automated traders alike, the impact of operational or system-related risk is far more pronounced for the latter.

Justifiably so, market regulators and exchanges foresee the looming threat posed by such evolved trading methodologies and have called for greater circumspection. While the process of periodic audits and existing regulations pertaining to

Imperative for traders and software programmers to be on the same page

Algo trading entails strategies that are formulated by traders but coded and deployed by software programmers. Because the two groups come from disparate fields, the lack of common knowledge or cross-functionality is one of the biggest challenges to overcome. If a programmer uploads the wrong trading modules into the Complex Event Processing (CEP) engines, the consequences on the trading front can be severe. Thorough stress testing of an algo is therefore imperative before it goes live in a market.

Primary risk management systems are typically integrated within the trading platforms and may fail to spot programming gaps, stale data or other corner cases. For an automated trading system to function properly, all of its technological components, including risk management, must work well in real time.

With so many changes being made to the trading system, whether to optimize latency or to enhance algo logic, it may not be possible to comprehensively validate the performance of risk management in live markets. And it sometimes happens that the risk management system fails without the algo trader even being aware of it.

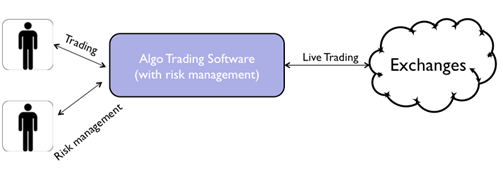

The flow of a single trading and risk management system is shown below. The same software is used for both the trading engine and the risk engine. This works well in 99.99% of cases, but in rare cases it fails, and algos can go haywire and cause market disruptions.
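To make the single-system flow concrete, here is a minimal Python sketch of inline pre-trade checks of the kind a primary risk engine runs before each order leaves the platform. The `Order` and `RiskLimits` names and the three checks (quantity cap, price band, stale-data guard) are illustrative assumptions, not any exchange's mandated set. Because such a function lives in the same process as the trading logic, a wrong limit or a code path that skips it goes unnoticed by anything else.

```python
import time
from dataclasses import dataclass


@dataclass
class Order:
    symbol: str
    side: str    # "BUY" or "SELL"
    qty: int
    price: float


@dataclass
class RiskLimits:
    # Hypothetical limit set, for illustration only.
    max_order_qty: int      # per-order quantity cap
    price_band_pct: float   # allowed deviation from the last traded price, in percent
    max_data_age_s: float   # market data older than this is treated as stale


def pre_trade_check(order, last_price, last_update_ts, limits, now=None):
    """Return the reasons to reject `order`; an empty list lets it through."""
    now = time.time() if now is None else now
    reasons = []
    if order.qty <= 0 or order.qty > limits.max_order_qty:
        reasons.append("quantity outside limit")
    if now - last_update_ts > limits.max_data_age_s:
        reasons.append("stale market data")
    if abs(order.price - last_price) > last_price * limits.price_band_pct / 100.0:
        reasons.append("price outside band")
    return reasons


limits = RiskLimits(max_order_qty=1_000, price_band_pct=5.0, max_data_age_s=1.0)
print(pre_trade_check(Order("ABC", "BUY", 500, 104.0), 100.0, 10.0, limits, now=10.5))
# → []
print(pre_trade_check(Order("ABC", "BUY", 5_000, 120.0), 100.0, 7.0, limits, now=10.5))
# → ['quantity outside limit', 'stale market data', 'price outside band']
```

Passing `now` explicitly keeps the check deterministic for testing; in production it would default to the wall clock.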

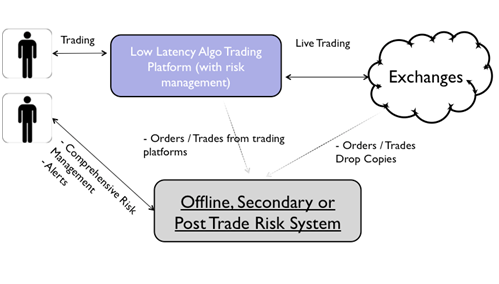

To address this problem, a Secondary Risk Management System (SRMS) can act as a second line of defense, safeguarding against issues in the primary system. This workflow is elaborated below.

Because such a system runs offline, it does not add to the latency of live trading. All orders, trades, modifications, etc. are captured in the offline risk management system, which can be used to do the following:

- Perform all the risk checks the primary system is meant to perform, acting as a second line of defense and providing alerts within microseconds. Such a system could, for example, have saved Knight Capital from its loss of more than $400mn in 2012, when it took the firm 45 minutes to realize what went wrong and rectify it. A secondary risk management system could have caught it within seconds.

- Perform more comprehensive risk checks beyond the exchange- or regulator-mandated ones, such as Greeks exposure (gamma, vega, theta, etc.) for options, margin utilization and various other risk parameters.

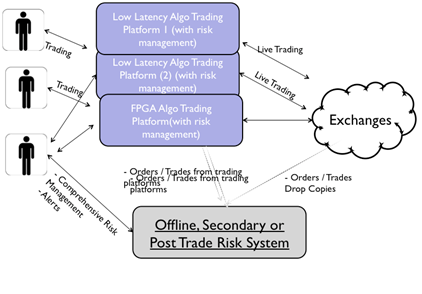

- Aggregate risk checks across servers and the various algo trading software in use (including FPGA-driven systems), enabling firm-wide risk management, which is not otherwise possible today. The aggregation process is depicted in the image below.
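A minimal Python sketch of the SRMS duties listed above, assuming the SRMS receives copies of every new-order and fill event tagged with the originating server or engine (for example via an exchange drop copy or an internal message bus; the transport is out of scope). `SecondaryRiskMonitor`, `Fill` and all limits are hypothetical. The Greeks are assumed to be per-unit values supplied with each fill and are not revalued afterwards, and exposure is summed per Greek rather than per underlying; a real system would revalue with live market data and break exposure down further.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field


@dataclass
class Fill:
    source: str      # reporting server or engine (e.g. an FPGA box); names are hypothetical
    symbol: str
    signed_qty: int  # positive for buys, negative for sells
    greeks: dict = field(default_factory=dict)  # per-unit Greeks, e.g. {"gamma": 0.5}; empty for non-options


class SecondaryRiskMonitor:
    """Hypothetical offline monitor fed with copies of order and fill events."""

    def __init__(self, max_abs_position, max_orders_per_window, window_s, greek_limits):
        self.max_abs_position = max_abs_position
        self.max_orders_per_window = max_orders_per_window
        self.window_s = window_s
        self.greek_limits = greek_limits
        self.positions = defaultdict(int)         # firm-wide net position per symbol
        self.greek_exposure = defaultdict(float)  # firm-wide exposure per Greek (not split by underlying)
        self.order_times = defaultdict(deque)     # source -> timestamps of recent new orders
        self.alerts = []

    def on_new_order(self, source, ts):
        # Sliding-window order-rate check per source, to flag a runaway algo.
        # Assumes timestamps arrive in non-decreasing order per source.
        window = self.order_times[source]
        window.append(ts)
        while ts - window[0] > self.window_s:
            window.popleft()
        if len(window) > self.max_orders_per_window:
            self.alerts.append(f"{source}: {len(window)} orders in {self.window_s}s window")

    def on_fill(self, fill):
        # Positions and Greeks are summed across every source, giving the firm-wide view.
        self.positions[fill.symbol] += fill.signed_qty
        position = self.positions[fill.symbol]
        if abs(position) > self.max_abs_position:
            self.alerts.append(f"{fill.symbol}: firm-wide position {position} over limit")
        for name, per_unit in fill.greeks.items():
            self.greek_exposure[name] += per_unit * fill.signed_qty
            limit = self.greek_limits.get(name)
            if limit is not None and abs(self.greek_exposure[name]) > limit:
                self.alerts.append(f"{name} exposure {self.greek_exposure[name]:.2f} over limit")


mon = SecondaryRiskMonitor(max_abs_position=1_000, max_orders_per_window=3,
                           window_s=1.0, greek_limits={"gamma": 50.0})
for t in (0.0, 0.1, 0.2, 0.3):
    mon.on_new_order("server-A", t)
mon.on_fill(Fill("server-A", "XYZ", 600))                      # within limits on its own
mon.on_fill(Fill("fpga-1", "XYZ", 600))                        # firm-wide total now 1,200
mon.on_fill(Fill("server-B", "XYZ-CALL", 60, {"gamma": 0.5}))  # gamma 30
mon.on_fill(Fill("fpga-1", "XYZ-CALL", 60, {"gamma": 0.5}))    # gamma 60 firm-wide
print(mon.alerts)
# → ['server-A: 4 orders in 1.0s window', 'XYZ: firm-wide position 1200 over limit', 'gamma exposure 60.00 over limit']
```

The usage run illustrates the aggregation argument: neither server-A nor fpga-1 breaches the 1,000-unit position limit on its own, and neither option fill alone pushes gamma past 50, yet the combined view does. The sketch only records alerts; whether an alert should also trigger a kill switch is a policy choice it leaves open.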

When implemented in an existing setup, an SRMS can go a long way toward instilling confidence among all market participants. After all, one gains the confidence to step on a car's accelerator only if the safety measures are in place, the brakes are foolproof and the backup handbrake is there too.

Time for India to accelerate.

(Kunal Nandwani is Founder and CEO of uTrade Solutions)

(Image: bankers-anonymous.com)